Bioprocessing is full of legacies. Our remote ancestors discovered fermentation: microbial magic that transformed fruit to wine and grain to beer.

Building on the work of Edward Jenner and others, Edward Ballard systematically reinfected cattle to make vaccines. Louis Pasteur revolutionized both fermentation and vaccination by showing that different microbes caused fermentation and spoilage (saving wine and beer production from disastrous batch contamination), establishing the germ theory of disease, and using that knowledge to develop new vaccines against endemic infections.

Another legacy (from Paul Ehrlich’s “magic bullet” concept) led to development of horse antitoxins — and later, antibody therapeutics. Deep-tank fermentation of penicillin mold enabled commercial-scale antibiotic production just in time for World War II. Separation of plasma from blood enabled battlefield transfusions, and after the war, extraction of proteins from plasma produced breakthrough treatments for hemophilia. The massive post-war public inoculation campaigns against polio used vaccines from monkey kidney tissue cultures.

The development of recombinant DNA technology made possible large-scale production of therapeutic proteins with bacterial and mammalian cell cultures, supplanting older techniques for extracting proteins from blood and making vaccines in animal tissues as well as launching a whole new category of magic-bullet medicines: monoclonal antibodies.

Legacy system is a term of art in computer technology, but the concept is useful in all industries that use complex technologies with high fixed costs so that installed infrastructure has staying power. A legacy system is an old technology, process, method, or installation that persists in use despite the availability of newer/better technologies, processes, and methods because replacement is too costly or reckoned cost ineffective. Additional factors freeze legacy systems in place: organizational inertia, regulatory burden, and the need to keep a business running all day, every day on a big “installed base” (as in airlines and finance).

Bioprocess engineers don’t often think in terms of legacy systems. As Alex Kanarek (senior consultant at BioProcess Technology Consultants Inc.) asked, “What do you consider a legacy system? I’ve only heard that phrase used in the sense of computer systems that predate the 21 CFR 11 regulation and were considered relatively unvalidatable.” Others I interviewed pointed out that nearly everything in bioprocessing is a legacy, especially fermentation, CHO expression, protein A, and paper documentation.

This survey of key legacy biomanufacturing systems — technologies, processes, and materials — focuses on therapeutic protein manufacture using cell-based systems (mostly Chinese hamster ovary or CHO cells, which produce >70% of today’s biologics). Although biotechnology could not exist without nearly 200 years of vaccine research, nothing could be more different than the empirical origins of vaccination and the scientific foundation of modern biotechnology. Vaccines are both the oldest and the most conservative of the biologics, with a complex history and a rapidly changing present that merit a separate article. Here, vaccine production is mentioned only in passing.

Most bioprocess facilities still use the same basic hardware and wetware that were developed for the first generation of bacterial fermentors: stainless steel tanks of liquid nutrient media, in which clones of genetically engineered cells produce a protein of interest, which is separated from the broth using primarily chromatography and filtration, and finally packaged for shipping in fill and finish operations with their own legacy systems. In this sense, biomanufacturing has been around a human generation or so (~30 years), less than information technology (IT), in which a few encapsulated legacy systems date back to the early 1970s (e.g., at NASA, banks, and airlines). But as Kanarek’s comment suggests, biomanufacturing is becoming increasingly data dependent. The IT systems that support quality and process analytics, document manufacturing results, and store records for regulators share the legacy issues of all IT systems.

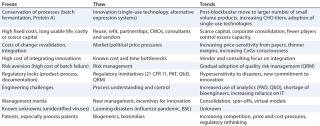

Forces and Trends That Freeze and Thaw Legacies

“Don’t touch it unless you have to” is a nearly universal engineering maxim, as is its financial counterpart: “Don’t spend unless you have to.” And bioprocess engineers are more averse to change than those in other industries. The sheer difficulty of working with living systems makes bioprocessors extraordinarily likely to stick with the first approach that works reproducibly, produces a safe product, and satisfies regulatory authorities. That’s not a bad thing, but such contingencies can be a powerful force for freezing systems in place. And the downside of sticking with legacy systems can be considerable: bottlenecks that increase cost of goods sold (CoGS), barriers to potentially cost-saving innovation, inability to respond quickly to disease outbreaks, downtime, and other losses, especially when no one on staff understands a legacy system for repair or recovery.

With the technical challenges of genetic engineering, cell expression, and protein purification — and the need for what Laurel Donahue-Hjelle (business leader in cell culture at Life Technologies, Inc.) calls “robust, reproducible processes” — it should be no surprise that the biotherapeutics industry is characterized by conservation of processes. The first approach to work well enough often becomes standard: e.g., batch fermentation, CHO cell expression, and protein A affinity chromatography.

Bryan Monroe of Primus Consulting provides some historical perspective. “When I started in the industry in the mid-1980s, it was very wide open. There was just not a lot of standardization. Now, here we are 30 years later: There’s a lot of standardization, but often that’s just ossification. For example, in the [influenza] vaccine industry we see 50 years of using chicken eggs because it’s the established process. But it’s incredibly expensive, slow, and not very efficient.”

Cost is the defined reason that legacies persist. Some systems are considered to be too expensive to change. Nevertheless, cost is a slippery concept that reflects multiple financial and economic considerations interacting with other forces. In an ideal world (MBA school), return on investment (RoI) for a fixed asset is calculated rationally using total cost of ownership (TCO) over the expected life of the asset, discounted to the present at a realistic cost of capital and adjusted for depreciation at a rate that reflects replacement cost. Once in operation, variable CoGS calculations would reflect manufacturing efficiencies and their contribution to a product’s profit margin. All that sounds fine — but the devil is in the details: the assumptions, estimates, and guesses that engineers and accountants choose to fill in those equations. In the real world, cost can also be a proxy (excuse) for other factors that freeze or thaw systems.
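To make the "devil in the details" point concrete, here is a minimal sketch of a discounted cash-flow calculation. Every figure in it is a hypothetical placeholder (an up-front build cost of 750, then 25 years of net cash inflows of 100, in millions), and the model deliberately ignores taxes, depreciation, and risk adjustment. It only illustrates how strongly the result depends on the assumed cost of capital.

```python
def npv(rate, cash_flows):
    """Net present value of yearly cash flows.

    cash_flows[0] occurs today (year 0); cash_flows[t] occurs at the end of year t.
    rate is the assumed annual cost of capital, e.g. 0.10 for 10%.
    """
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


# Hypothetical plant (all numbers are illustrative assumptions, in millions):
# pay 750 up front, then receive a net 100 per year for 25 years.
flows = [-750.0] + [100.0] * 25

for rate in (0.05, 0.10, 0.15):
    print(f"cost of capital {rate:.0%}: NPV = {npv(rate, flows):,.0f}")
```

With these made-up inputs the same project looks attractive at a 5% cost of capital and loses money at 15%, so the choice of discount rate alone can decide whether a replacement is "too expensive."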

High Fixed Costs and Long Life Spans: Building a greenfield biomanufacturing facility and equipping it with a typical blockbuster-era “four-pack” (four 15,000-L bioreactors) for commercial-scale production would cost $700–800 million. Another $100 million/year would be needed “just to keep it warm, in do-no-harm mode,” says Andy Skibo (EVP operations, MedImmune). By the nature of concrete and steel, as Kanarek commented, “the usable life of a fermentor system can be 25 years if you look after it. In that time, of course, you hope to regain all the costs of installing the equipment in the first place.” The challenge is risk. Although a successful product can pay for its own plant in months, facilities must be built and validated before phase 3. If a product fails, then it recovers none of its manufacturing costs. Unless the next product in a company’s pipeline can move right in, the result can be downtime or a mothballed plant. Facilities built for products that ultimately failed have contributed significantly to overcapacity.

Table 1: Forces that freeze and thaw processes and examples

Costs of Change: The immediate cost of replacing a process looms large in financial decision-making, not just because a dollar today is worth more than a dollar tomorrow. These costs are not easy to estimate. In addition to the price of new equipment, they include regulatory revalidation expenses and the cost of integrating new technology with installed systems, as well as the often-ignored risk-adjusted cost of failure if things go badly. So it is easy for the default position to be “if it ain’t broke, don’t fix it.”

Cash Constraints: For companies developing products that take years to reach the market (with a high risk of failure along the way), cash is an especially scarce resource. Unless a small, venture-funded biotech finds a way to generate its own revenues, cash is a burning fuel between trips to the financial markets — and such capital is expensive, if available at all (1). Even at the biggest pharmaceutical companies, cash is under budgetary constraint, and manufacturing is a “cost center” competing with other departments (regulatory, marketing, legal, public affairs) for corporate funds. Cash constraints tend to favor the smallest necessary changes in installed, functional systems.

Scale: For billion-dollar products, the variable cost of manufacturing is a relatively small contributor to CoGS compared with R&D, clinical trials, regulatory compliance, legal and intellectual property (IP) costs, and marketing. During the blockbuster era, fat margins and huge revenue streams put little pressure on making changes to installed systems for cost reduction. But big batches equal big risk, the cost of losing one batch being so high that it affects risk assessment and cost calculations.

Now, an ongoing shift from blockbusters toward niche biologics (and personalized treatments) is already changing the cost equation. Along with the capacity glut and the rise of outsourcing, that makes for better RoI from refitting, automation, improved process control systems, single-use technologies, and flexible factory design.

Back in 2000, assumptions about trends in expression titers combined with the blockbuster mentality to produce upstream-heavy facilities. Skibo says, “A generic plant was four 15,000-L bioreactors, very heavy on fermentation and light on downstream purification — big battleships that might make the same product 12 months’ straight, or two products at most.” Both assumptions are gone now, so “in 2020, instead of two or three, we’ll have 10 or 12 products, not just our own [at MedImmune], but sharing capacity with our partner Merck.”

Economic Environment: In the inflationary 1980s, biotech was young, and venture capital flowed over from high-tech. Interest rates were on their way down from record highs; furthermore, before 1986, depreciation tax treatments were generous discounts against higher tax rates. Under those conditions, the net present value (NPV) of building, e.g., a recombinant insulin plant — such as the first 40,000-L Humulin fermentor built by Eli Lilly under contract with Genentech (2) — was much lower than in today’s environment of record-low interest rates and scarce capital. Real costs of building de novo have also risen, including the costs of compliance with environmental and other regulations, local real estate and operating tax rates, and all documentation and validation necessary for FDA certification, which Bryan Monroe referred to as “the GMP footprint cost.”

Markets and Politics (Payers, Prices, and Patents): During the blockbuster era, healthcare payers were less price sensitive, especially to breakthrough biologics for diseases with unmet needs. Changes to Medicare/Medicaid reimbursement, the rise of provider formularies, and other factors have put increasing price pressure on biologics manufacturers even as lower lifetime product revenues make producers more sensitive to manufacturing costs. Meanwhile, a generation of biologics is going off patent. With emerging competition from biosimilars, innovators will become even more cost-sensitive. Although regulatory and IP issues are still murky, new companies may be better positioned to experiment with process changes that significantly reduce costs as some established manufacturers (e.g., MedImmune and Merck) are gearing up to make biosimilars of their own and under contract.

A Powerful Driver for Change

Threats are more powerful than opportunities. Epidemics have spurred changes in bioprocessing: e.g., the systematic development and institutionalization of cowpox vaccination against smallpox invented the concept of vaccination. Global polio epidemics in the early 20th century prompted vaccine research, but it took major US outbreaks in 1952 and 1953 to mobilize the millions of dollars in R&D that led to two vaccines and mass inoculation. And it took a looming global influenza pandemic in 2009 to push the flu vaccine industry and its regulators out of 50 years of a legacy chicken-embryo system toward recombinant cell-based systems.

Despite an admirable historical safety record, however, the threat of adventitious viral contamination remains an inherent risk of using living cells as drug factories. The many potential sources of contamination include the cell lines themselves and animal- and plant-based culture media ingredients (with their own global supply issues), all complicated by the “known unknowns” of undiscovered and unidentified viruses. So processes that have successfully prevented contamination tend to freeze until something forces them to change. For example, the use of animal products in culture media was a legacy system until a number of contamination episodes around the turn of the 21st century — e.g., porcine circovirus in rotavirus vaccines and the highly publicized transmissible spongiform encephalopathies — caused bioengineers to seek less risky substitutes.

Consolidation, Capacity, and Concentration: At >30 years old, the biotherapeutics industry is now mature. Although interest rates make capital cheaper than it was in the 1980s, fresh money is less available than ever, especially to new companies. To improve margins and corporate RoI as the blockbuster era wound down, big pharmaceutical companies acquired innovative biotech products and their cash-strapped developers. Today only Amgen remains of the first generation of independent, fully integrated biotech companies. In a pattern familiar to other industries (e.g., banking), the biggest biomanufacturers acquired smaller ones and sometimes each other. Consolidation has churned nearly 300,000 people through layoffs and plant closures, even as the first generation of bioprocessing experts is nearing retirement (3).

Meanwhile, unanticipated improvement in CHO titers since 1999 has produced a glut of large-scale upstream capacity, shifting the geographic distribution and concentration of biomanufacturing capacity. The United States still has by far the most cell culture capacity: nearly twice that of Europe and four times that in Asia (4). Still, there is less active capacity on the west coast and more in the old industrial pharma heartlands of the east. The top 10 pharmaceutical companies control ~75% of global mammalian cell capacity, but they use <50% of their available capacity (5). So older facilities are more attractive for reuse as in-house or contract capacity or to sell off to independent contract manufacturing organizations (CMOs). That along with other factors (e.g., demand for same–time-zone capacity, single-use technology, trusted partner arrangements, and contracting out) has made US domestic capacity increasingly competitive with offshore outsourcing.

The rise of offshore outsourcing and new global markets has also contributed to cost sensitivity. Not long ago, the idea of globalization conjured a pipe dream of low-cost biofactories in Asia supplying multinationals based in California, New Jersey, and Switzerland. But changes in capital flow, political and regulatory pressures, excess large-scale capacity, and the need for coordination across time zones are driving companies to prefer local capacity. Emerging geographic markets for biologics (especially vaccines) are turning some facilities built as offshore outsourcers into suppliers for their own local markets, not only in Asia but also in eastern Europe and South America.

By increasing the potential for pandemics, globalization has begun to thaw some vaccine legacies, most dramatically in flu vaccine production and regulation. Likewise, the growth of global markets for low-cost, low-margin vaccines has encouraged innovation in their development, production, and regulation. Global sourcing of upstream inputs (especially culture media) has forced formulation changes and challenged once-trusted legacy wholesale relationships in the supply chain. Cross-border disasters such as the 2008 heparin contamination triggered regulatory reactions with unintended consequences on the global drug supply.

Nevertheless, outsourcing noncore capability is a long-term industrial trend beyond biomanufacturing. IT is the most visible example, in which unintended consequences of offshoring, downsizing, and contract labor have made contractors, consultants, and professional services permanent parts of the landscape. Although bio-offshoring has not grown to that extent (nor as much as predicted 10 years ago), outsourcing “remains the dominant model for small, especially venture-funded biopharma companies,” according to Tom Ransohoff (VP and senior consultant at BioProcess Technology Consultants).

Changing roles of CMOs, vendors, and consultants also illustrate long-term changes in design and operation of bioprocesses and facilities. Thirty years ago, emerging companies built their own bioprocess capacity, each expecting to grow up to be like Amgen or Genentech. Today, even though some such greenfield construction is still going on, the favored business model for founders and financiers is a “virtual” company outsourcing as much R&D as possible. That has contributed to increasing demand for CMOs while the glut of upstream capacity in geographically desirable locations has made it cost-effective for them to buy and refit old facilities.

Ransohoff says, “We used to just consider make-versus-buy: Build a facility and make the product yourself, or buy the product from a contract manufacturer. Now, it’s make-versus-buy-versus-acquire because there is so much existing capacity, and depending on the type of facility or capacity you need, there may be a good fit already out there on the market.”

He says that CMOs are “becoming more technically sophisticated as the industry matures,” evolving from contractors that closely follow customer “recipes” to trusted partners providing expertise, process design, and consulting support. They are becoming an increasing source of innovation, “starting to drive adoption of new technologies where they make sense.”

Consulting itself has become a more important part of the business as the first generation of seasoned experts have founded professional service organizations or become available as freelancers. Consulting offers an alternative position for engineers laid off because of consolidation or who are at a stage in their careers at which part-time and/or project work is appealing.

Vendors, too, are offering more specialty expertise. Ransohoff calls them “a great source of technical knowledge, particularly about their particular product areas and field.” As Monroe explained, “Where vendors have been helping the industry move forward is when they come in with ‘carpool’ ideas. Their new technology doesn’t get you off the paved road, but it gets you into the HOV lane so you can move faster.”

The success of single-use technologies has been fueled by inventive vendors such as Xcellerex, PBS Biotech, and others that sell design and integration expertise (“solutions”) as much as they sell equipment. Today in IT, the big hardware and software vendors (IBM, Cisco, Microsoft) mostly acquire innovation by buying small, creative vendors and integrating (not always smoothly) their products into their own product lines. A similar pattern may be emerging in biomanufacturing — as with GE acquiring Xcellerex, for example, and with companies such as Life Technologies and Thermo Fisher conglomerating multiple smaller product vendors to offer broad ranges of products, services, and consulting.

People are the most important legacy in any industry. No matter how thoroughly documented procedures and processes are, they require expert engineers as operators, and expertise requires experience. But industry consolidation and consequent layoffs have churned staff and shifted the geographic distribution of facilities. Furthermore, the move from big-volume blockbusters to more products at smaller scales increases the need for bioengineers even as many Asian engineers trained in the United States and Europe are returning home to build their own region’s biopharmaceutical industry.

Offshore outsourcing has not had the deleterious effect on bioprocessing that it has had in some heavily IT-dependent industries. For example, cycles of offshoring, layoffs, and hiring based on cost have left financial service firms with practically no legacy expertise in house, so they rely on outside consultants to provide continuity. Nonetheless, as the first generation of bioengineers reaches retirement, a potential shortage of expertise is building.

Eric Langer (president and managing partner of BioPlan Associates) reported on his company’s industry survey in Life Science Leader for July, 2012 (6). The top factor “likely to create biopharmaceutical production capacity constraints in five years… cited by 27.7% of respondents, was ‘inability to hire new, experienced technical and production staff.’ Inability to retain this staff was [the next most-cited factor,] noted by 22.6% of respondents.”

Keeping staff has thus become a priority for companies with legacy facilities, especially when they make “divest, keep warm, or refit?” decisions. Skibo described the best-case scenario this way: “Thanks to our Merck arrangement, we’ve added over 100 headcount of MedImmune employees at Frederick, MD, in the past year, and we will probably add another 50 this year. This is a complete reversal of what the situation would have been if we had decided to hold the plant in ‘keep-warm’ mode and lay off much of the staff.”

The large number of layoffs (~300,000 in the pharmaceutical industry since 2000) has not made more bioengineers available (7). Langer writes (6), “Although production and process professionals… account for almost three in five hires over the next five years, that doesn’t mean they are easy to find. Indeed, while there has been a steadily increasing demand for scientists with operations and process engineering backgrounds, there has not been an increase in the number of scientists moving into these fields.” When asked which job positions they were finding difficult to fill, most survey respondents cited hiring of process development professionals. “This was especially true,” writes Langer, “in the case of upstream process development” (6).

He writes that budgets for hiring are up, “and biomanufacturers and CMOs alike are recognizing the productivity and efficiency benefits of hiring employees with the right skills.” However, no major changes are made to fill these vacancies. Such skilled employees are often cultivated through internal training, which leads to companies “poaching” from one another.

As in other industries going through consolidation and layoffs, bioprocessors are relying more on outside sources of expertise. Individual consultants, professional service consulting firms, and the growing number of specialized experts in CMOs are building a reservoir of technical leaders who can augment in-house staff. Such “trusted partnerships” may help transition the industry from legacy blockbuster facilities to flexible factories.

Security Legacies: Until recently, physical security was not a major concern for biopharmaceutical developers; a need for sterile facilities and operations had the fortuitous side effect of providing considerable physical security around biomanufacturing buildings. However, recent news has increased the industry’s awareness of a growing problem of organized large-scale burglary from pharmaceuticals warehouses and shipments (8). Although theft rings don’t specifically target biologics, they are frequently included in a haul. Stolen drugs can reenter wholesale distribution channels with no attention to careful cold-chain management, which exposes the public to risks, as with a 2009 incident in which diabetics received ineffective insulin. The legacy system of presumed trustworthiness in drug wholesalers is now under scrutiny. The same corrupt wholesalers that pay for stolen drugs also channel “gray-market” and counterfeit drugs, with potential risk to brand reputations as well as patients.

At the other end of the supply chain, misrepresented or outright counterfeit inputs are a growing risk to biomanufacturers as well. The 2008 heparin scandal is the most visible example of a breakdown in the legacy assumption of trust throughout the supply chain.

Time Zones: Time may equal money, but it is never on anyone’s side. Time is built into systems that use living organisms or cells such that associated constraints are rarely questioned. “We have just accepted that CHO cells double every 24 hours,” Monroe points out. “What if they doubled every 18 hours? That takes six hours per day out of your process; over a 14-day process, you’ve just eliminated two days of expense.”
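Monroe's thought experiment can be turned into a back-of-the-envelope calculation. The sketch below rests on a simplifying assumption that is not from the interview: only the growth-limited portion of a run shortens in proportion to the doubling time, and the rest of the schedule is unchanged. The function name and the split between growth-limited and other days are hypothetical.

```python
def days_saved(process_days, growth_limited_days, old_doubling_h, new_doubling_h):
    """Estimate days removed from a run when cells double faster.

    Assumption (illustrative only): the growth-limited days scale linearly
    with doubling time; the remaining days of the run do not change.
    """
    if not 0 <= growth_limited_days <= process_days:
        raise ValueError("growth_limited_days must lie between 0 and process_days")
    scale = new_doubling_h / old_doubling_h  # e.g. 18 / 24 = 0.75
    return growth_limited_days * (1 - scale)


# 14-day run, doubling time cut from 24 h to 18 h.
print(days_saved(14, 14, 24, 18))  # whole run growth-limited
print(days_saved(14, 8, 24, 18))   # only 8 of the 14 days growth-limited
```

Under this model the saving ranges from 3.5 days (if the entire run were growth-limited) to 2 days (if 8 of the 14 days were), so the real benefit depends on how much of the schedule actually tracks cell doubling.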

With years-long R&D timelines, there is less pressure to speed up bioprocess development, which is not usually a limiting factor on the critical path to market. Matching the timing of clinical trials with that of bioprocess development is an ongoing concern. Susan Dana Jones of BioProcess Technology Consultants says, “It’s safe to change production systems up through the end of phase 2, but you really should go in to phase 3 with your intended commercial production system.” The length and expense of clinical testing dwarfs savings from more efficient bioprocesses. With the patent clock ticking down, any change in manufacturing after product launch risks further loss of precious time.

Offshore outsourcing of biomanufacturing was until recently deemed inevitable. But major unanticipated operational difficulties in coordinating bioprocesses 12 hours away from headquarters have increased the demand for CMOs with operations in the same time zones as their customers. That spurs refitting and reuse of legacy factories, especially those operating on US Eastern Time.

The Technical Design Space

There is no shortage of new ideas and inventions in biomanufacturing. Just look at the hundreds of alternative expression systems, with dozens listed recently by Langer (9). These include whole-organism systems, bacteria, algal chloroplasts, protozoa, yeast, angiosperm cells, insect cells using the baculovirus expression vector, human cell lines, and a number of cell-free systems. And yet, few have managed to challenge the dominance of CHO systems.

Donahue-Hjelle pointed out that academic research has a bigger potential process design space than biomanufacturing does. When she moved from an academic position into industry consulting, “the hardest thing to get used to was to innovate not using all the technology available, but within a confined design space of what regulatory agencies had already seen or what was a small-enough step forward that they wouldn’t reject it. Sometimes clients would reject it because they didn’t want to be the first to take it through the FDA. They wouldn’t mind if somebody else did, but they would wait.”

Information Technology: Does biomanufacturing have its own IT legacies? This industry has a history of being a late adopter of computer technology and thus being unable to attract top-notch IT people. The latter is thawing as outsourcing and downsizing across the IT industries increase the supply of experienced IT professionals available. And the rise of bioinformatics, genomics, and systems biology has produced computational biospecialities that attract talented newcomers to learn both biological and IT skills.

Older biotech installations often run legacy instrumentation and process control systems that constrain the trajectory of scale-up. By contrast, de novo and refit flexible factories offer opportunities to install instrumentation that can scale up from 4 L to 15,000 L in one leap. Advances in IT over the past 20 years also support leapfrog jumps in process control. Skibo spoke of how MedImmune took advantage of building a new plant adjacent to an existing legacy facility by installing the latest automation, instrumentation, and process control systems in a flexible-factory design. That includes a duplicate system used for process shakedown and offline simulations for training legacy staff from the old facility.

Process analytical technology (PAT) and other analytic advances call for replacing legacy process control instrumentation and laboratory equipment. But such changes can affect current good manufacturing practice (CGMP) certification and pose daunting revalidation costs. Industry consultant and trainer Tom Pritchett says, “Upgrading hardware or software for an automated manufacturing system, if it’s a large enough change or upgrade, may require partial or even full revalidation of the computerized system. That’s a fairly high bar in the decision-making process of when to upgrade.”

Documentation: Paper documentation is a large and resistant legacy that illustrates the stubborn nature of barriers to electronic data adoption. 21 CFR Part 11 has been something of a two-edged sword: an attempt to thaw legacies that presents discouraging costs. The biotherapeutics industry still depends largely (~50% of developers) on paper-based documentation, especially in smaller and emerging companies. Ransohoff was among several interviewees who suggested that this stems from fear regarding the cost and complexity of software validation. Also, when migrating from paper-based to software systems, biomanufacturers will encounter exactly the same suite of problems that the IT and software industries have been dealing with for years. Software becomes obsolete faster than ever now, committing companies to future rounds of migration and revalidation.

Infosecurity: Increasing use of the Internet and cloud computing by multinational biopharmaceutical companies is exposing them to cyberthreats. Because the clinical trials community needs to keep data blinded (well aware of people in the financial community who can profit from premature release of data), it has been concerned with electronic security for years. This is a regulator issue as well, as illustrated by a 2011 FDA insider trading case and accidental public postings by a contractor regarding agency surveillance of a group of its own scientists (10).

Regulation

The traditional role of regulation has been to freeze processes: CGMP for biologics meant that “the product is the process.” As Kanarek explains, “Once you scaled it up to commercial scale, once you validated the process, once you demonstrated that it produces the specified product reliably, reproducibly, batch-after-batch, you have sufficient data to say to the FDA, ‘We know what is happening in this process and we are going to fix it like that.’ And when they give you the marketing license to sell the product, you are undertaking not to change the process without letting them know.” Or as Jones put it, “Whatever you use in phase 3 should be what you at least launch your initial commercial sales from.”

Process engineers and their business managers worry as much about changes in the interpretation or enforcement of rules as they do about changes to regulations themselves. “I’ve seen some 483s (observation of deficiencies) for Part 11 issues, but not very many, not recently,” reports Tom Pritchett. “Back in the late 1990s, you saw tons. So the FDA is laying low. But we’re only one tragedy away from FDA clamping down on Part 11 records; and when they do it nearly everyone will have a legacy GMP automation system.”

A Brief History of Risk (11)

Gambling is at least as old as the Egyptians, who depicted games of chance on their tomb walls 5,500 years ago. Aristotle described commodity options, and prototypes of shipping and life insurance were used in classical Greece and Rome. However, it was not until the Renaissance that the statistics of chance were calculated and studied. Over the next few centuries, gaming, financial, actuarial, and insurance interests advanced the understanding of probability and risk.

The Industrial Revolution invented new hazards. Steam-engine accidents led to the first US Federal regulations establishing safety standards and inspections in 1838. The Food, Drug and Cosmetic Act’s Delaney Clause (1954–1960) prohibited “carcinogenic” additives, illustrating the FDA’s historical attachment to a zero-tolerance, risk-aversion paradigm. By contrast, regulation of industrial exposure to hazardous chemicals used threshold limit values (TLVs) intended to prevent “unreasonable risk of disease or injury.” That represents a step in the direction of cost–benefit analysis.

Toward the end of the 20th century, the FDA launched a series of initiatives addressing risk in new ways. In the 1990s, it rolled out new guidance for food-processing hazards, promoting a risk-management method called hazard analysis and critical control points (HACCP). In 1997, the FDA migrated from medical device GMPs to quality system regulation (QSR) in harmony with emerging international standards. The agency would soon (2002–2004) expand that approach to pharmaceuticals under its QbD and quality risk management (QRM) initiatives.

Pritchett says that the fear of potential regulatory changes (rules or interpretations) can freeze risk-averse management into waiting “until after the next inspection; if something gets flagged, then we’ll fix it.” Such an approach can miss incremental changes to current GMPs and result in “a manufacturer getting creamed because they didn’t keep up.”

As many historical horror stories remind us, unanticipated FDA changes are inevitable. One recent example is the agency’s reaction to the 2008 heparin contamination scandal, which triggered a shortage of low-cost drugs dependent on a small number of manufacturers for their supplies. Consider also a paradox: FDA leadership advances initiatives such as quality by design (QbD) and continuous processing, yet much of the industry believes that implementing such innovations for real products will meet resistance from reviewers unfamiliar with them. Top-down initiatives from regulators, however, can trigger a thawing of legacies and prod the industry into new paths.

As a growing number of biologics come off patent, the regulatory challenges of biosimilars have triggered a reevaluation of the product = process paradigm in the context of biosimilarity. This is still a work in progress, with many details remaining unclear.

Process Knowledge and Risk Management

Due to regulatory encouragement, risk management is a hot topic in biotech. More detailed discussions of this emerging approach to viral contamination, for example, can be found in three recent articles by Hazel Aranha of Catalent Pharma Solutions (12,13,14). This represents a thawing from the legacy position of risk-aversion to a more process-knowledge–centered approach.

In biomanufacturing, any contamination can lead to expensive batch losses, regulatory actions, plant closures, legal trouble, and harm to product/company reputation. And contamination can harm patients by causing shortages of limited-source drugs and even leading to disease or death from undetected toxins or pathogens.

Adventitious contamination has been a hazard throughout the history of fermentation. Traditional open containers exposed beer and wine to unwanted microbes that produced undesirable chemicals. Pasteur began his work on germ theory with a consulting engagement to discover the cause of failed batches of sugar-beet alcohol. Basic techniques (e.g., pasteurization and excluding outside organisms) to prevent and mitigate bacterial and fungal contamination were established decades before the advent of recombinant biotechnology. A tougher challenge has been preventing, detecting, and mitigating viral contamination, which is a special hazard in mammalian cell culture that was first confronted with blood-sourced biologics.

The problem of transfusion-transmitted disease was recognized early in the history of blood transfusion; donors were screened for syphilis in the 19th century. With the growth of paid donation and blood banking during World War II — and the consequent emergence of post-transfusion hepatitis — viral infections became a recognized hazard of receiving whole blood. That spurred research into identifying the viruses responsible. An antigen biomarker for hepatitis B was discovered in 1965, enabling blood to be screened in the 1970s. But it was 1989 before the hepatitis C virus was identified at Chiron using modern molecular biology techniques.

Bioprocess engineers were thus well aware of viral safety issues from the beginning, and they knew that unidentified viruses posed a threat. That was made tragically clear when the human immunodeficiency virus (HIV) entered the blood supply in the late 1970s, leading to the infection of thousands of hemophilia patients through contaminated blood products. An ensuing multinational scandal involving both corporate misconduct and regulatory malfeasance coincided with the initial period of growth in rDNA biomanufacturing and underscored the grievous consequences of viral contamination. (It also accelerated the move from products extracted from blood to those made through recombinant cell culture.)

“Initial approaches to ensuring virological safety of biologics were responsive and disaster led,” writes Aranha (12). Vaccines, too, have suffered multiple incidents of viral contamination, sometimes in products that reached the market (e.g., pediatric RSV and rotavirus vaccines). Causes include incomplete inactivation of live viruses, contamination of production systems, and contaminated excipients. Such incidents have not always caused harm, but the public and the media have zero tolerance for such hazards anyway, making any contamination incident damaging not only to individual vaccine manufacturers, but also to the very concept of vaccination.

Through a combination of risk aversion and good fortune, no pathogenic viruses have yet been iatrogenically transmitted through biopharmaceuticals made by recombinant cell lines (13). That perfect record has reinforced conservation of processes and risk-aversion in bioengineering. But preserving that record has recently motivated manufacturers and regulators to adopt more sophisticated approaches to risk management. Such techniques have their roots in statistics used in finance for centuries, in civil aviation safety since the 1930s, and in other high-risk manufacturing industries (including small-molecule pharmaceuticals) in the ensuing decades.

Bioindustry’s evolving approach to preventing and mitigating viral contamination exemplifies the transition from a legacy attitude of risk aversion to a proactive risk management paradigm (14). Much credit goes to the FDA’s initiative: Several years of tentative guidance were codified in 2004’s Pharmaceutical CGMPs in the 21st Century — A Risk-Based Approach.

A number of factors make viral contamination a particularly challenging risk-management issue. The potential for harm is great; even when not deadly, virus contamination incidents are always expensive, high-profile failures. The risk-acceptance level is low: Search for “rotavirus contamination” online to see how negative news coverage, distressed parenting bloggers, and the anti-vaccination movement predominate. Although (or perhaps because) the statistical risk is low, preventing viral contamination is not easy. Potential sources include infection of master cell banks, contamination of bioreactors or other processing equipment, and viruses or fragments lurking undetected in animal and plant-based growth factors, reagents, and excipients. Newly emerging or currently undiscovered viruses are a perennial threat that global connectivity has increased at all points in the supply chain and manufacturing process.

Viral hazards are especially hard to detect. As Aranha put it, detecting viruses faces more limitations than detecting bacterial contamination does. Even more than with other infectious agents, with viruses “there are known-knowns, there are known-unknowns, and then there are the unknown-unknowns.” The latter two make 100%, “no-threshold” safety probabilistically impossible. “The concept of ‘virus-free’ is only as good as our detection methods,” she continues. “Since you find only what you’re looking for, there’s no guarantee that you haven’t missed something. Absence of evidence is not evidence of absence.”

Thus, Aranha explains, “Current approaches are preemptive and focus on disaster avoidance. The emphasis is on knowledge management rather than simple data accumulation, efficient risk management and an overall lifecycle approach,” with continual improvement based on generating new information over time (12). Under the new paradigm, proactive risk mitigation comes to the fore: screening out viruses in cells, tissues, or blood as well as from materials used in media and other processes; incorporating virus clearance steps into manufacturing; and monitoring production with thoughtfully chosen panels of screening assays (13).

Such multilayered approaches can minimize hazards — but here too, the devil is in the details. Old cell banks may have outlived their historical documentation, and exposure of cell banks or other inputs to animal-derived materials may never have been documented at all. The awareness of unknowns does not in itself provide ways to detect them. Being exceptionally motivated to preserve a perfect record, the rDNA biomanufacturing industry and its regulators are obliged to follow the advice of JK Rowling’s Mad-Eye Moody from Harry Potter and the Goblet of Fire: “Constant vigilance!”

Integration

Perhaps the topic of downstream processing has received short shrift herein. But so have the topics of people and documentation, all worthy of full articles in their own right. Another important issue that has not received its due is the challenge of integration. Many legacies have persisted in large part because they are well integrated within the established biomanufacturing design space; indeed, a large part of that design space is built around CHO culture and protein A unit operations. Integration is what ties novel and legacy processes together. And the most successful innovations (e.g., single-use technologies) facilitate integration with the rest of a system rather than demanding a complete reengineering of installed and familiar elements.

Author Details

Ellen M. Martin is managing director of life sciences for the Haddon Hill Group Inc., 650 Kenwyn Road, Oakland, CA 94610; 1-510-832-2044, fax 1-510-832-0837.

REFERENCES

1/03/31/drug-theft-goes-big..