Process characterization (PC) studies are experiments performed primarily at laboratory scale to demonstrate process robustness and provide data necessary for planning, risk mitigation, and successful execution of process validation (1, 2). These typically involve extensive, multifactorial testing designed to determine the effects of operational parameter perturbations and raw materials on process performance and product quality (1, 2). Product-specific information from development studies may be used to help guide PC study design; however, such information may be limited or insufficiently substantiated before PC begins. PC studies thus may include many operating parameters that ultimately have little or no process impact but are included in PC study designs because prior knowledge is lacking. In addition, most design-of-experiments (DoE) studies used for PC are powered to identify two-way interactions, which may be rare or have no significant process effects.

Recent guidance documents highlight a regulatory expectation that prior knowledge should be leveraged to increase overall process understanding (3, 4, 5, 6, 7). ICH Q11, in particular, calls for use of prior knowledge from platform processes and products. Information from PC, commercial process development, and other sources related to products and processes with similar motifs (such as monoclonal antibodies) can be used to improve the efficiency and targeting of PC strategies. The processes described herein illustrate a framework for leveraging prior platform knowledge to help focus PC studies only on areas of high risk or uncertainty.

A Case Study

Our prior-knowledge assessment (PKA) process borrowed from many elements used for systematic reviews (8, 9, 10, 11):

- Framing each assessment in the form of a question to be answered or an inference to be proven from prior knowledge

- Identifying reliable information sources based on relevance to the stated question

- Predefining methods for compilation and analysis of data from source information

- Prospectively identifying contributing factors that could affect the outcome of an analysis

- Reviewing data/knowledge sources according to predetermined methods for compilation and analysis

Each of those elements is described below. We offer a PKA case study from a platform chromatography column step as an example to illustrate the concepts. For proprietary reasons, neither the specific product-quality attributes affected nor the operating parameters are revealed; however, such information is not necessary for illustrative purposes.

Framing the Assessment

The Assessment Question: The primary outcome of PC studies is identification of operational parameter perturbations with significant effects on process performance, with particular emphasis on product quality. The magnitude of an effect may be reported with quantitative data or qualitative information. To frame this assessment, pose the question as you would when describing the purpose of a PC experiment, except that here historical platform data, rather than new experiments, supply the answer.

For our case study, the question concerned the operating parameters that control two impurities in the platform chromatography step. We posed it as follows: Which operating parameters, when perturbed either to the edge of or outside their normal operating ranges, most frequently affect impurity X and Y levels in the chromatography column pools?

Acronyms Used Herein

Normal operating range (NOR): The operating range for a parameter that is found in manufacturing batch records (typically based on equipment tolerances and capabilities rather than process capability); the range is generally two-sided (± around a set point). “1×, 2×, … NOR” refers to how an operating parameter is set relative to the NOR for a given study. For example, if the pH set point and NOR are 7.5 ± 0.2, then a study testing the pH range of 7.1–7.9 would be a “2×” NOR study.

Process characterization (PC): Experiments (performed primarily at laboratory scale) to demonstrate process robustness and provide data necessary for planning, risk mitigation, and successful execution of process validation.

Design of experiments (DoE): Multifactorial experiments designed to identify the effects of operating parameter perturbations on process performance; they may be designed to find two-way interactions between operating parameters as well as main effects.

Process impact rating (PIR): An operating parameter rating that is a function of the sensitivity of process performance to perturbations of the operating parameter.

Prior-knowledge assessment (PKA): An assessment process in which process and product-related issues and questions are addressed using prior knowledge; it involves identification, compilation, and analysis of historical data in such a way that a question can be answered — and, if not, then any knowledge gaps are clearly defined.
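The “1×, 2×, … NOR” convention in the NOR definition is simple arithmetic: divide the half-width of the tested range by the NOR half-width. The minimal Python sketch below assumes a two-sided NOR around the set point. The function name `nor_multiple` is hypothetical.

```python
def nor_multiple(set_point: float, nor_half_width: float,
                 tested_low: float, tested_high: float) -> float:
    """Return how many NORs a tested range spans, e.g. 2.0 for a "2x" NOR study.

    Assumes a two-sided NOR (set_point +/- nor_half_width). If the tested range
    is asymmetric, the larger excursion from the set point is used.
    """
    if nor_half_width <= 0:
        raise ValueError("NOR half-width must be positive")
    max_excursion = max(set_point - tested_low, tested_high - set_point)
    return max_excursion / nor_half_width

# pH example from the definition: set point and NOR 7.5 +/- 0.2, tested 7.1-7.9
print(round(nor_multiple(7.5, 0.2, 7.1, 7.9), 2))  # 2.0
```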

Identification of Information Sources

Relevance criteria ensure that only information sources pertinent to the question(s) being addressed are used, and search criteria should be built from them. For some questions, only data from products made with the most recent version(s) of a platform process may be appropriate; for others, products made from earlier versions or even nonplatform processes using similar unit operations may be relevant. In leveraging prior knowledge to help define the PC scope for a new platform molecule, DoE studies from platform processes are among the best sources of information. Also useful are development studies in which operating parameters are tested over a broad range before an optimal set point is determined.

Our Example: The question identified above involved two primary relevance criteria. The first was that the reports had to be about the particular chromatography mode that was applied to the platform products. The second criterion was that information had to pertain to the response of impurity X or Y levels to the perturbation of one or more operating parameters. On the basis of those criteria, we included both historical PC studies and development reports. We believed that searching manufacturing data from nonconformances would provide minimal relevant information because the incidence of relevant excursions would be very small. In addition, many of those are transient in nature (e.g., a pH swing or conductivity spike) and therefore not relevant to the question at hand.

Reliability Criteria: To be included in an assessment, information should meet a reliability threshold, which will depend on the purpose and scope of the assessment. For example, when leveraging data from reports to calculate control limits or validation acceptance criteria, set a high reliability threshold (e.g., qualified analytical methods, qualified small-scale models, and verification data). However, if your goal is to obtain general directional information for planning and strategic purposes, then a lower reliability threshold may be used.

Examples of reliability aspects include veracity (approved documents rather than drafts, notebook data, and so on), method reliability (experimental methods and fit-for-use, qualified, or validated methods), and study design (statistical rigor, appropriate controls, and small-scale model qualification).

Our Example: Information from our assessment would be used to develop new strategies for PC based on our understanding of our platform knowledge. Because we would not be using historical data to directly generate new information, our only reliability criterion was that methods used for measuring impurities X and Y were fit-for-use.
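The relevance and reliability screening described above can be written as a simple filter. The minimal Python sketch below assumes that each candidate report has already been tagged with hypothetical metadata fields (`chromatography_mode`, `attributes_measured`, `method_fit_for_use`). The mode label "mode A" is a placeholder, because the actual chromatography mode is not disclosed.

```python
from dataclasses import dataclass

@dataclass
class Report:
    """Hypothetical metadata for one candidate source report."""
    report_id: str
    chromatography_mode: str      # placeholder label; the real mode is not disclosed
    attributes_measured: set      # quality attributes with perturbation-response data
    method_fit_for_use: bool      # reliability criterion used in the case study

def screen_reports(reports, mode, attributes):
    """Keep reports that satisfy both relevance criteria and the reliability criterion."""
    selected = []
    for r in reports:
        right_mode = r.chromatography_mode == mode                # relevance criterion 1
        has_response = bool(r.attributes_measured & attributes)   # relevance criterion 2
        if right_mode and has_response and r.method_fit_for_use:  # reliability
            selected.append(r)
    return selected

candidates = [
    Report("R1", "mode A", {"X"}, True),
    Report("R2", "mode B", {"X", "Y"}, True),   # wrong chromatography mode
    Report("R3", "mode A", {"Y"}, False),       # method not fit for use
    Report("R4", "mode A", {"X", "Y"}, True),
]
print([r.report_id for r in screen_reports(candidates, "mode A", {"X", "Y"})])  # ['R1', 'R4']
```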

Searches: We recommend both electronic and manual searches to ensure that a comprehensive set of reports will be available for your assessment. Subject-matter–expert queries can help identify relevant information that is older or more difficult to locate.

Our Example: We used electronic searches to identify reports that met our relevance and reliability criteria. Those searches revealed a list of reports covering 14 platform products and processes. Subject-matter–expert queries identified no additional sources.

Consolidation and Analysis of Data

Assessment of Operating Parameter Main Effects: Information used in PKAs can come from a number of sources spanning several years, multiple departments, and different phases of development. Some parameters might not include product-specific data if they have already been determined to be low-risk through prior or general scientific knowledge. Process-optimization experiments may focus on only a few operating parameters tested over broad ranges. Robustness and PC studies test many operating parameters over a fixed and generally more limited range. One-off experiments typically examine just one or two operating parameters over multiple ranges.

Data may be reported in different ways: as continuous numerical values (e.g., effect-size estimates from DoEs); as ordinal data, with the outcome being one of several ordered categories (e.g., “practically significant,” “statistically significant,” or “no effect”); or as binary data (e.g., “significant effect” versus “no effect,” or yes/no). Some reports combine these modes, along with directional and qualitative information (e.g., negative and positive correlations or quadratic effects).

The process impact rating (PIR) score allows all types of results to be compiled into a single ordinal data set. It combines two pieces of information: the process-performance effect magnitude (a qualitative assessment) and the magnitude of the operational perturbation relative to the normal operating range (NOR). The effect magnitude can fall into three main categories:

- “Large effect” (a performance effect larger than the expected process variability that may potentially exceed validation or in-process control limits)

- “Small effect” (a statistically significant effect, p < 0.05, or a trend that appears significant, but with little overall process impact, no greater than normal process variability)

- “No effect” (p > 0.05 or determined not to be significant by a report’s author(s)).
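For continuous DoE results, the three categories can be assigned mechanically. The minimal sketch below makes two assumptions that are not taken from the text. First, "normal process variability" is represented by a single reviewer-chosen number in the same units as the effect estimate. Second, that number is the cutoff between "small" and "large." Ordinal or binary outcomes, trends that "appear significant," and authors' own significance judgments still require reviewer judgment and are not covered here.

```python
def effect_category(p_value: float, effect_size: float,
                    process_variability: float, alpha: float = 0.05) -> str:
    """Classify a continuous result as 'large', 'small', or 'none'.

    Assumes effect_size and process_variability share units (e.g., change in
    impurity level vs. its typical run-to-run spread).
    """
    if p_value >= alpha:
        return "none"    # not statistically significant
    if abs(effect_size) > process_variability:
        return "large"   # exceeds normal process variability
    return "small"       # significant, but within normal process variability

print(effect_category(0.20, 3.0, 1.0))  # none
print(effect_category(0.01, 2.5, 1.0))  # large
print(effect_category(0.01, 0.5, 1.0))  # small
```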

The magnitude of perturbation is expressed relative to the NOR, which is the operating range used in manufacturing batch records and is generally considered a function of equipment tolerances and capabilities rather than process capability. Combining that with the process-performance effect magnitude gives an overall PIR score for an operating parameter in a given study (Figure 1).

Operating parameters with a PIR of “4” have the greatest impact on a given product quality attribute or performance parameter. Operating parameters with a score of “3” have a relatively small but measurable impact. Parameters rated “1” or “2” are considered to have no effect, but those scored “1” were subjected to a greater perturbation (>2× NOR) than those scored “2.” A null result over that wider range provides greater assurance of process robustness and so warrants the lower PIR.
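The rules in the preceding paragraph can be expressed as a small scoring function. This is a simplified sketch that uses only the rules stated in the text. Figure 1 may grade effects against perturbation magnitude in ways the prose does not spell out, so treat this mapping as an approximation, not the article's scoring matrix. The function name `pir_score` is hypothetical.

```python
def pir_score(category: str, nor_multiple: float) -> int:
    """Simplified PIR assignment based on the rules stated in the text.

    category: 'large', 'small', or 'none' (process-performance effect magnitude)
    nor_multiple: perturbation tested, in multiples of the NOR (e.g., 2.0 for "2x")
    """
    if category == "large":
        return 4
    if category == "small":
        return 3
    if category == "none":
        # A null result at a wider perturbation (>2x NOR) gives more assurance
        # of robustness, so it earns the lowest rating.
        return 1 if nor_multiple > 2 else 2
    raise ValueError(f"unknown category: {category!r}")

print(pir_score("none", 2.0), pir_score("none", 3.0))  # 2 1
```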

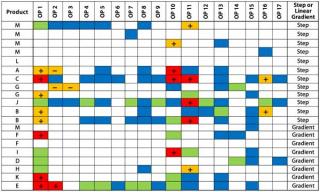

Our Example: Among the reports included in our assessment, results were reported as continuous data in most cases, but in several instances they were reported as binary or ordinal data. Figures 2 and 3 show process-impact compilation tables for process impurities X and Y, respectively. Operating parameters 1, 10, and 11 had the most frequent PIR scores >2 for both impurities. Operating parameters 13 and 14 had a relatively high frequency of PIR scores >2 for impurity X only. Operating parameter 2 also had a relatively high incidence of PIR scores >2, although fewer data were available for it. None of the other parameters had PIR scores >3, and most had a preponderance of scores of 1. With the exception of operating parameter 2, all perturbation responses were consistently in the same direction for a given operating parameter. In addition, the direction of the responses for operating parameters 1, 10, and 11 was the same for impurities X and Y, which indicated that the same set of “worst-case” operating conditions could be used for both.

Several operating parameters were examined in just a few instances (e.g., operating parameter 6). In most of these cases, those parameters had already been eliminated from study designs based on prior risk assessments and general scientific knowledge.
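A compilation table like those in Figures 2 and 3 reduces, per parameter, to counting how often the PIR exceeded 2. The minimal sketch below assumes that the PIR results are available as (parameter, PIR) pairs, one per study result. The parameter names and scores are invented for illustration and are not case-study data.

```python
from collections import defaultdict

def high_impact_frequency(pir_records, threshold=2):
    """Tally, per operating parameter, how often its PIR exceeded the threshold.

    pir_records: iterable of (parameter, pir) pairs, one per study result.
    Returns {parameter: (n_above_threshold, n_results, fraction_above)}.
    """
    counts = defaultdict(lambda: [0, 0])
    for parameter, pir in pir_records:
        counts[parameter][1] += 1
        if pir > threshold:
            counts[parameter][0] += 1
    return {p: (n_hi, n, n_hi / n) for p, (n_hi, n) in counts.items()}

# Invented scores for illustration only (not case-study data).
records = [("OP1", 4), ("OP1", 3), ("OP1", 1), ("OP10", 4), ("OP10", 2), ("OP6", 1)]
summary = high_impact_frequency(records)
for parameter, (n_hi, n, frac) in sorted(summary.items(), key=lambda kv: -kv[1][2]):
    print(f"{parameter}: {n_hi}/{n} results with PIR > 2 ({frac:.0%})")
```

Reporting the number of results alongside the fraction matters. A parameter such as OP6, with a single result, says little either way, which is the situation described above for sparsely studied parameters.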

Assessing Two-Way Interactions and Quadratic Effects: Just as the most important main effects are observed repeatedly across platform processes, meaningful two-way interactions and quadratic effects (nonlinear responses) are likely to recur across multiple processes. An isolated observation, particularly one with a small performance-effect magnitude, is unlikely to reflect a true mechanism of process control. That rule is consistent with the general statistical objective of creating empirical models that are as simple and parsimonious as possible (12, 13). Identifying practically significant, recurring two-way interactions and quadratic effects will enable investigators to focus on those that contribute to process control in a meaningful way.

Our Example: Ten reports were powered to examine two-way interactions and quadratic effects. For an interaction to be considered meaningful for process control, it had to meet all of the following criteria: statistical significance (p < 0.05), a significant main effect for at least one of the interacting parameters, an effect size ≥25% of the largest main effect, and recurrence in more than one study.

Thirty-one two-way interactions were reported for impurity X and 10 for impurity Y. Of those, we found three recurring interactions for X and one for Y. None of those recurring interactions had an effect size approaching 25% of the dominant main effect contained in that interaction. We observed no significant recurring quadratic effects across all studies. Thus, two-way interactions and quadratic effects do not appear to play a practically significant role in the control of either impurity.
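The four screening criteria can be applied in sequence to every reported interaction. The minimal sketch below makes several assumptions. Each interaction is stored as a dict with hypothetical keys. The 25% comparison uses the dominant main effect of the two interacting parameters. A pair counts as recurring only if it passes the first three criteria in at least two studies. All data are invented for illustration.

```python
from collections import defaultdict

def meaningful_interactions(records, alpha=0.05, min_ratio=0.25, min_studies=2):
    """Return the parameter pairs whose two-way interaction passes all four screens.

    Each record (hypothetical schema) describes one interaction reported in one study:
      'study'  : study or report identifier
      'pair'   : the two interacting parameters, e.g. ("OP1", "OP10")
      'p'      : p-value of the interaction term
      'effect' : interaction effect estimate
      'main'   : {parameter: (main-effect estimate, p-value)} for both parameters
    """
    passing_studies = defaultdict(set)
    for r in records:
        # Criterion 1: the interaction itself is statistically significant.
        if r["p"] >= alpha:
            continue
        # Criterion 2: at least one interacting parameter has a significant main effect.
        if not any(p < alpha for _, p in r["main"].values()):
            continue
        # Criterion 3: effect size >= 25% of the dominant main effect in the pair.
        dominant = max(abs(est) for est, _ in r["main"].values())
        if abs(r["effect"]) < min_ratio * dominant:
            continue
        passing_studies[frozenset(r["pair"])].add(r["study"])
    # Criterion 4: the interaction recurs, i.e., passes in more than one study.
    return {pair for pair, studies in passing_studies.items() if len(studies) >= min_studies}

interactions = [  # invented data
    {"study": "S1", "pair": ("OP1", "OP10"), "p": 0.01, "effect": 1.0,
     "main": {"OP1": (3.0, 0.001), "OP10": (2.0, 0.01)}},
    {"study": "S2", "pair": ("OP10", "OP1"), "p": 0.03, "effect": 0.9,
     "main": {"OP1": (3.5, 0.001), "OP10": (1.0, 0.2)}},
    {"study": "S1", "pair": ("OP2", "OP6"), "p": 0.02, "effect": 0.2,
     "main": {"OP2": (2.0, 0.01), "OP6": (0.1, 0.6)}},
    {"study": "S2", "pair": ("OP2", "OP6"), "p": 0.01, "effect": 0.1,
     "main": {"OP2": (1.8, 0.01), "OP6": (0.2, 0.5)}},
]
print([sorted(pair) for pair in meaningful_interactions(interactions)])  # [['OP1', 'OP10']]
```

In this example, the OP2 × OP6 interaction recurs but never reaches 25% of its dominant main effect, so it is screened out. This is the pattern the case study found for every recurring interaction.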

Assessing Raw-Material Variability: Along with operating-parameter perturbations, differences in raw materials can also affect process performance. Vendor-to-vendor variability, lot-to-lot variability, and different raw-material handling or treatment practices all can contribute to overall process performance. One main consideration is the raw-material attribute variability among lots tested, particularly for those attributes that can affect process performance (e.g., chromatography media ligand density). The overall magnitude of raw-material “perturbation” for a given study would be a function of raw-material–attribute (or specification) range coverage, the total number of raw-material lots tested, different raw-material handling or treatment procedures, and any other potential sources of variability.

Our Example: We examined the effect of chromatography media ligand density for two different products. Eight lots with 75% ligand-density–specification range coverage were examined for one product, and three lots with 40% coverage were studied for the second. We found no differences in impurity levels or any other performance parameters among the lots tested. That strongly suggests that, when varied within the specified range, ligand density does not significantly affect clearance of either impurity X or Y. But because all operating parameters were run at their set points in both studies, a small possibility remains that ligand density could affect impurity levels when operating parameters are perturbed.
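The article does not spell out how range coverage was calculated. This minimal sketch uses one plausible reading: the span of attribute values among the lots tested, divided by the width of the specification range. The ligand-density values and specification limits below are hypothetical and were chosen only to reproduce coverages of 75% and 40%.

```python
def spec_range_coverage(lot_values, spec_low, spec_high):
    """Fraction of a specification range spanned by the raw-material lots tested.

    Assumption: coverage = (highest lot value - lowest lot value) / spec width,
    with lot values clipped to the specification limits.
    """
    if spec_high <= spec_low:
        raise ValueError("spec_high must exceed spec_low")
    low = max(min(lot_values), spec_low)
    high = min(max(lot_values), spec_high)
    return max(0.0, high - low) / (spec_high - spec_low)

# Hypothetical ligand-density results (arbitrary units) against a 40-60 specification.
eight_lots = [43, 45, 47, 50, 52, 55, 56, 58]
three_lots = [48, 50, 56]
print(spec_range_coverage(eight_lots, 40, 60))  # 0.75
print(spec_range_coverage(three_lots, 40, 60))  # 0.4
```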

Assessing Contributing Factors: For a given process step, a number of contributing factors could affect the responses to operating-parameter perturbations. These may include unique process-design elements (e.g., chromatography elution mode), different raw-material characteristics, and product physicochemical and structural properties (e.g., viscosity, hydrophobicity, and mechanical and thermal stability). The latter may be particularly true for drug-product operations that involve mechanical, physicochemical, or thermal stress. Identification of such contributing factors may not only uncover previously unknown process information, it may also help identify instances in which greater caution should be applied to the use of platform knowledge for new product candidates. Potential contributing factors can be identified by sorting a PIR data table by a given contributing factor.
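Sorting a PIR table by a contributing factor amounts to grouping each parameter's scores by factor level and comparing the groups. The minimal sketch below assumes that the table is a list of dicts with hypothetical column names. The rows in the usage example are invented for illustration and are not case-study data.

```python
from collections import defaultdict

def pir_by_factor(rows, parameter, factor):
    """Group one operating parameter's PIR scores by the level of a contributing factor.

    rows: list of dicts with hypothetical keys 'product', 'parameter', 'pir',
          plus one key per candidate contributing factor (e.g., 'elution_mode').
    Returns {factor_level: sorted list of PIR scores}.
    """
    groups = defaultdict(list)
    for row in rows:
        if row["parameter"] == parameter:
            groups[row[factor]].append(row["pir"])
    return {level: sorted(scores) for level, scores in sorted(groups.items())}

# Invented rows for illustration only.
rows = [
    {"product": "A", "parameter": "OP1", "pir": 4, "elution_mode": "gradient"},
    {"product": "B", "parameter": "OP1", "pir": 1, "elution_mode": "step"},
    {"product": "C", "parameter": "OP1", "pir": 3, "elution_mode": "gradient"},
    {"product": "D", "parameter": "OP1", "pir": 2, "elution_mode": "step"},
]
print(pir_by_factor(rows, "OP1", "elution_mode"))
# {'gradient': [3, 4], 'step': [1, 2]}
```

A clean separation between levels, as in this invented example, would flag the factor as a possible modifier of the perturbation response. Overlapping groups would suggest that it is not one.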

Our Example: We identified two factors with the potential to influence the perturbation response for impurity Y: product net charge (neutral or basic) and elution mode (gradient or step). Figures 4 and 5 sort the PIR tables based on those two factors. We broke down the net-charge contribution according to products with a pI >7.5 and those with a pI

Review

A review process captures report data and associated PIR results. Information captured should include perturbation responses (either qualitative or quantitative), with actual ranges tested relative to NOR and a data reference (page number, figure or table number, and so on). Instances in which no data exist or results are inconclusive also should be noted. Figure 6 is a data-capture spreadsheet for a hypothetical unit operation.
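A data-capture sheet like the one in Figure 6 can be kept as structured records and exported to a spreadsheet. The minimal sketch below uses Python's standard `csv` and `dataclasses` modules. The column names are hypothetical. They were chosen to mirror the items listed above: perturbation response, range tested relative to NOR, data reference, and a blank PIR where data are missing or inconclusive.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import Optional

@dataclass
class CaptureRow:
    """One row of a PKA data-capture sheet (hypothetical column names)."""
    report_id: str
    parameter: str
    response: str                   # qualitative or quantitative perturbation response
    tested_range: str               # actual range tested, e.g. "7.1-7.9"
    nor_multiple: Optional[float]   # range tested relative to NOR (None if unknown)
    data_reference: str             # page, figure, or table number in the source report
    pir: Optional[int] = None       # None when no data exist or results are inconclusive
    note: str = ""

def to_csv(rows):
    """Serialize capture rows to CSV text for use in a spreadsheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(CaptureRow)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))  # None values are written as empty cells
    return buffer.getvalue()

sheet = [
    CaptureRow("R1", "OP1", "impurity X increased at high end", "7.1-7.9", 2.0, "Table 3", 3),
    CaptureRow("R2", "OP6", "not tested", "", None, "", None, "no data"),
]
print(to_csv(sheet))
```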

Because of the subjective nature of some analyses — such as distinguishing between large and small performance effects — we recommend involving more than one reviewer. When reviewers disagree on a PIR score, either use the most conservative rating (higher of the two PIR scores) or categorize such data as “inconclusive.” That will keep potentially important parameters from being overlooked.
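The two reconciliation options described above can be written as a small rule. The minimal sketch below assumes integer PIR scores. Treating a missing score from either reviewer as inconclusive is an added assumption, not a rule stated in the text.

```python
from typing import Optional, Union

def reconcile(score_a: Optional[int], score_b: Optional[int],
              policy: str = "conservative") -> Union[int, str]:
    """Combine two reviewers' PIR scores for the same study result.

    policy='conservative' keeps the higher (more cautious) PIR;
    policy='inconclusive' flags any disagreement instead.
    A missing score (None) from either reviewer is treated as inconclusive.
    """
    if score_a is None or score_b is None:
        return "inconclusive"
    if score_a == score_b:
        return score_a
    if policy == "conservative":
        return max(score_a, score_b)
    if policy == "inconclusive":
        return "inconclusive"
    raise ValueError(f"unknown policy: {policy!r}")

print(reconcile(2, 3))                  # 3
print(reconcile(2, 3, "inconclusive"))  # inconclusive
```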

Implications for PC Study Design

PKAs from platform processes are but one input to planning for PC. Prior knowledge can complement and substantiate information from product-specific development studies and subject-matter expertise. For example, several operating parameters in our case study were the subject of no more than two or three studies with relevant data; however, general scientific knowledge had already been used to eliminate those parameters from experimental work.

DoEs are well-established tools for process development and PC (1, 13, 14). They provide an efficient and mathematically robust way to identify operating parameters and interactions that most affect process performance. By building on a foundation of prior platform knowledge, including information from DoEs and other experimental approaches, we can prospectively identify operating conditions with the greatest potential to negatively affect product quality and process performance. Use of such knowledge can obviate the need for large, multifactorial studies and allow for a more direct way to test the boundaries of process performance by setting operating parameters at their worst-case conditions for each performance parameter.
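When main effects dominate and their directions are consistent, as in the case study, a worst-case condition can be read from the sign of each main effect. Each parameter is set at whichever edge of its tested range moves the response in the unfavorable direction. The minimal sketch below assumes monotonic, main-effect-only responses, which matches what the assessment found for impurities X and Y. The parameter names, effect values, and limits are hypothetical.

```python
def worst_case_settings(effects, limits, worse="higher"):
    """Choose, for each parameter, the edge of its tested range that drives a
    response toward its unfavorable direction.

    effects: {parameter: signed main-effect estimate (response change per unit increase)}
    limits:  {parameter: (low, high)} edges of the range to be tested
    worse:   'higher' if a higher response is worse (e.g., an impurity level),
             'lower' if a lower response is worse (e.g., step yield)
    Assumes monotonic responses (main effects only, no meaningful interactions
    or quadratics). A zero effect defaults to the low edge.
    """
    if worse not in ("higher", "lower"):
        raise ValueError("worse must be 'higher' or 'lower'")
    settings = {}
    for parameter, effect in effects.items():
        low, high = limits[parameter]
        push_up = effect > 0 if worse == "higher" else effect < 0
        settings[parameter] = high if push_up else low
    return settings

limits = {"OP1": (7.1, 7.9), "OP10": (10.0, 30.0)}
impurity_x = {"OP1": 0.8, "OP10": -0.5}   # invented effect estimates
impurity_y = {"OP1": 0.3, "OP10": -0.2}
wc_x = worst_case_settings(impurity_x, limits)
wc_y = worst_case_settings(impurity_y, limits)
print(wc_x)          # {'OP1': 7.9, 'OP10': 10.0}
print(wc_x == wc_y)  # True: one worst-case run can challenge both impurities
```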

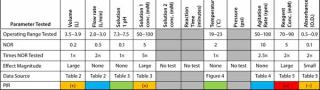

For the unit operation we described here, a full response-surface experimental design including all two-way interactions and quadratic effects would require more than 100 experiments (13). Based on findings from our assessment, investigators could focus on a narrowed set of operating conditions to determine main-effect magnitudes, and on an even narrower set of conditions that gives the worst-case performance for removal of each impurity in future platform processes. Table 1 shows a proposed set of experimental conditions. Operating parameters not shown would be run at their set points, because prior knowledge indicates that they do not significantly affect impurity removal.

Table 1: Proposed experimental operating conditions for process characterization

Experiments 1–7 represent an experimental design that would estimate the effect magnitude for each operating parameter known to affect impurity removal. Because there were no significant recurring two-way interactions, the design includes main effects only. Experiments 8–10 (highlighted in blue) provide the control and worst-case set of operating conditions for removal of both impurities. Testing that narrow set of operating conditions with multiple lots of raw materials, multiple or worst-case feed streams, and other discrete variables would help identify sources of process variability beyond those found by manipulation of operating parameters alone. This approach would not only give investigators a better overall understanding of process variability, but it may also save time and resources.
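The difference in experiment counts follows from counting model coefficients, because a design needs at least one run per coefficient for the model to be estimable. A full quadratic model in k factors has 1 + k + k(k − 1)/2 + k = (k + 1)(k + 2)/2 coefficients. A main-effects-only model has k + 1. The sketch below makes two assumptions. For the full design it uses k = 14 operating parameters; the case study numbers its parameters up to 14, but the total is not stated. For the narrowed design it uses k = 6, the parameters flagged by the assessment (1, 2, 10, 11, 13, and 14). The article does not say whether Table 1 was built from this count. The arithmetic only shows that seven runs is the minimum for a six-factor main-effects model.

```python
def quadratic_model_terms(k: int) -> int:
    """Coefficients in a full quadratic (response-surface) model with k factors:
    intercept + k main effects + k(k-1)/2 two-way interactions + k squared terms."""
    return 1 + k + k * (k - 1) // 2 + k

def main_effects_terms(k: int) -> int:
    """Coefficients in a main-effects-only model: intercept + k main effects."""
    return 1 + k

# Each coefficient needs at least one run, so these are lower bounds on run count.
print(quadratic_model_terms(14))  # 120 (assumed 14 operating parameters)
print(main_effects_terms(6))      # 7   (parameters 1, 2, 10, 11, 13, and 14)
```

Practical designs add replicates and center points, so real run counts are higher than these minimums.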

PKAs can be used to help define the current state of platform process understanding. Taken together with development studies, manufacturing campaign data, and other product-specific sources of information, they help focus PC activities by identifying high-risk parameters and shedding light on areas that warrant further study. PKAs also can reduce the cycle time for development studies by enabling development scientists to focus on parameters that most affect process performance. Finally, using prior platform knowledge allows investigators to rigorously assess process performance at worst-case operating conditions while also testing the effects of other sources of process variability.

Author Details

Corresponding author James E. Seely is director of knowledge management (1-303-401-1229 or 1-303-859-2747; jseely@amgen.com or jrseely@msn.com), and Roger A. Hart is a scientific director in product and process engineering. Both authors are at Amgen Colorado, 4000 Nelson Road, Longmont, CO 80503.

REFERENCES